7 Principle of maximum likelihood

7.1 Overview

Outline of maximum likelihood estimation

Maximum likelihood is a very general method for fitting probabilistic models to data, generalising the earlier method of least-squares. Consequently, it plays a very important role in statistics.

In a nutshell, the starting points in a maximum likelihood analysis are

- the observed data \(D = \{x_1,\ldots,x_n\}\) with \(n\) independent and identically distributed (iid) samples, with the ordering irrelevant, and

- a model \(P(\boldsymbol \theta)\) with corresponding probability density or probability mass function \(p(x|\boldsymbol \theta)\) and parameters \(\boldsymbol \theta\)

From model and data the likelihood function (note upper case “L”) is constructed as \[ L_n(\boldsymbol \theta) = L(\boldsymbol \theta|D)=\prod_{i=1}^{n} p(x_i|\boldsymbol \theta) \] Equivalently, the log-likelihood function (note lower case “\(\ell\)”) is \[ \ell_n(\boldsymbol \theta) = \ell(\boldsymbol \theta|D)=\sum_{i=1}^n \log p(x_i|\boldsymbol \theta) \] In the above notation, we either explicitly mention the data \(D\) or use an index to remind us of the sample size (here \(n\)).

The likelihood is multiplicative and the log-likelihood additive over the samples \(x_i\) because of the iid assumption.

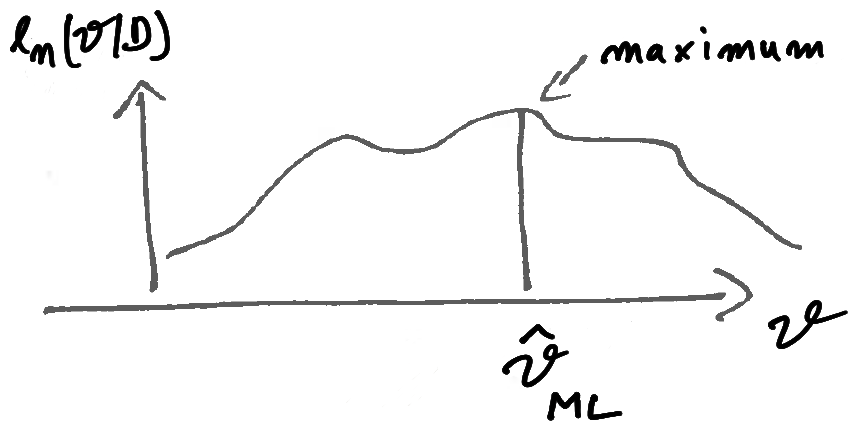

The maximum likelihood estimate (MLE) \(\hat{\boldsymbol \theta}_{ML}\) is then found by maximising the (log)-likelihood function with regard to the parameters \(\boldsymbol \theta\) (see Figure 7.1): \[ \hat{\boldsymbol \theta}_{ML} = \underset{\boldsymbol \theta}{\arg \max}\, \ell_n(\boldsymbol \theta) \]

Hence, once the model is chosen and data are collected, finding the MLE and thus fitting the model to the data is an optimisation problem.

Depending on the complexity of the likelihood function and the number of parameters, finding the maximum of the likelihood can be very difficult. On the other hand, for likelihood functions constructed from common distribution families, such as exponential families, maximum likelihood estimation is very straightforward and can even be done analytically (this is the case for most examples we encounter in this course).
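The contrast between an analytic and a numerical solution can be sketched as follows. This is a minimal illustration, assuming an exponential model with rate parameter (chosen here only as an example). The helper names `exp_log_likelihood` and `golden_section_max` are hypothetical. For this model the log-likelihood is \(n \log \lambda - \lambda \sum_i x_i\), with analytic maximiser \(\hat{\lambda}_{ML} = n / \sum_i x_i\). A derivative-free golden-section search should recover the same value.

```python
import math
import random

def exp_log_likelihood(rate, data):
    """Log-likelihood of an exponential model with the given rate."""
    n = len(data)
    return n * math.log(rate) - rate * sum(data)

def golden_section_max(f, lo, hi, tol=1e-8):
    """Maximise a unimodal function f on [lo, hi] using function values only."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    while b - a > tol:
        if f(c) > f(d):
            b = d  # the maximum lies in [a, d]
        else:
            a = c  # the maximum lies in [c, b]
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
    return 0.5 * (a + b)

random.seed(0)
data = [random.expovariate(2.0) for _ in range(500)]  # simulated data, true rate 2

rate_analytic = len(data) / sum(data)  # closed-form MLE
rate_numeric = golden_section_max(
    lambda r: exp_log_likelihood(r, data), 1e-3, 20.0
)
print(rate_analytic, rate_numeric)
```

The search interval `[1e-3, 20.0]` is an arbitrary choice for this sketch. In a real analysis it would have to be chosen so that it contains the maximum.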

In practice, for more complex models, the optimisation required for maximum likelihood analysis is done on the computer, typically on the log-likelihood rather than on the likelihood function in order to avoid problems with the computer representation of small floating point numbers. Suitable optimisation algorithms may rely only on function values without requiring derivatives, or additionally use gradient and possibly curvature information. In recent years there has been a lot of progress in high-dimensional optimisation using combined numerical and analytical approaches (e.g. using automatic differentiation) and stochastic approximations (e.g. stochastic gradient descent).
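The floating point issue can be made concrete with a small sketch. It assumes a standard normal model and simulated data, both chosen only for illustration, and the function names are hypothetical. With a few thousand samples the product of densities underflows to zero in double precision, whereas the sum of log-densities remains a perfectly ordinary number.

```python
import math
import random

def normal_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def likelihood(data, mu, sigma):
    """L_n(theta): product of the densities over the iid samples."""
    value = 1.0
    for x in data:
        value *= normal_pdf(x, mu, sigma)
    return value

def log_likelihood(data, mu, sigma):
    """l_n(theta): sum of the log-densities over the iid samples."""
    return sum(
        -0.5 * math.log(2.0 * math.pi) - math.log(sigma)
        - 0.5 * ((x - mu) / sigma) ** 2
        for x in data
    )

random.seed(1)
data = [random.gauss(0.0, 1.0) for _ in range(2000)]

L = likelihood(data, 0.0, 1.0)      # underflows to 0.0
l = log_likelihood(data, 0.0, 1.0)  # finite, large negative number
print(L, l)

# For a handful of samples both routes agree
small = data[:5]
print(math.log(likelihood(small, 0.0, 1.0)), log_likelihood(small, 0.0, 1.0))
```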

Origin of the method of maximum likelihood

Historically, the likelihood has often been interpreted and justified as the probability of the data given the model. However, while providing an intuitive understanding this is not strictly correct. First, this interpretation only applies to discrete random variables. Second, since the samples \(x_1, \ldots, x_n\) are typically exchangeable (i.e. permutation invariant) even in this case one would still need to add a factor accounting for the multiplicity of the possible orderings of the samples to obtain the correct probability of the data. Third, the interpretation of likelihood as probability of the data completely breaks down for continuous random variables because in that case \(p(x |\boldsymbol \theta)\) is a density, not a probability.

Next, we will see that maximum likelihood estimation is a well-justified method that arises naturally from an entropy perspective. More specifically, the maximum likelihood estimate corresponds to the distribution \(P(\boldsymbol \theta)\) that is closest in terms of KL divergence to the unknown true data-generating model as represented by the observed data and the empirical distribution.

7.2 From minimum KL divergence to maximum likelihood

The KL divergence between true model and approximating model

Assume we have observations \(D = \{x_1, \ldots, x_n\}\). The data are sampled from \(Q\), the true but unknown data-generating distribution. We also specify a family of distributions \(P(\boldsymbol \theta)\) indexed by \(\boldsymbol \theta\) to approximate \(Q\).

The KL divergence \(D_{\text{KL}}(Q,P(\boldsymbol \theta))\) measures the divergence of the approximation \(P(\boldsymbol \theta)\) from the unknown true model \(Q\). It can be written as \[ \begin{split} D_{\text{KL}}(Q,P(\boldsymbol \theta)) &= H(Q,P(\boldsymbol \theta)) - H(Q) \\ &= \underbrace{- \operatorname{E}_{Q}\log p(x| \boldsymbol \theta)}_{\text{mean log-loss}} -(\underbrace{-\operatorname{E}_{Q}\log q(x)}_{\text{entropy of $Q$, does not depend on $\boldsymbol \theta$}})\\ \end{split} \]

However, since we do not know \(Q\) we cannot actually compute this divergence. Nonetheless, we may use the empirical distribution \(\hat{Q}_n\) — a function of the observed data — as approximation for \(Q\), and in this way we arrive at an approximation for \(D_{\text{KL}}(Q,P(\boldsymbol \theta))\) that becomes more and more accurate with growing sample size.

Recall the “Law of Large Numbers”:

The empirical distribution \(\hat{Q}_n\) based on observed data \(D=\{x_1, \ldots, x_n\}\) converges strongly (almost surely) to the true underlying distribution \(Q\) as \(n \rightarrow \infty\): \[ \hat{Q}_n\overset{a. s.}{\to} Q \]

Correspondingly, for \(n \rightarrow \infty\) the average \(\operatorname{E}_{\hat{Q}_n}(h(x)) = \frac{1}{n} \sum_{i=1}^n h(x_i)\) converges to the expectation \(\operatorname{E}_{Q}(h(x))\).

Hence, for large sample size \(n\) we can approximate the mean log-loss and as a result the KL divergence. The mean log-loss \(H(Q, P(\boldsymbol \theta))\) is approximated by the empirical mean log-loss where the expectation is taken with regard to \(\hat{Q}_n\) rather than \(Q\) (see Example 4.3): \[ \begin{split} H(Q, P(\boldsymbol \theta)) & \approx H(\hat{Q}_n, P(\boldsymbol \theta)) \\ & = -\frac{1}{n} \ell_n ({\boldsymbol \theta}) \end{split} \] The empirical risk is equal to the negative log-likelihood standardised by the sample size \(n\).
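The quality of this approximation can be checked numerically. The sketch below assumes, purely for illustration, that the true model is \(Q = \text{Ber}(0.3)\) and that the candidate is \(P(\theta) = \text{Ber}(0.4)\). All function names are hypothetical. It compares the empirical mean log-loss \(-\frac{1}{n}\ell_n(\theta)\) with the exact mean log-loss \(H(Q, P(\theta))\) for increasing \(n\).

```python
import math
import random

def bernoulli_log_pmf(x, theta):
    """log p(x | theta) for a Bernoulli model with x in {0, 1}."""
    return math.log(theta) if x == 1 else math.log(1.0 - theta)

def mean_log_loss(q, theta):
    """Exact H(Q, P(theta)) for Q = Ber(q) and P(theta) = Ber(theta)."""
    return -(q * math.log(theta) + (1.0 - q) * math.log(1.0 - theta))

def empirical_mean_log_loss(data, theta):
    """H(Q_hat_n, P(theta)) = -(1/n) * l_n(theta)."""
    log_lik = sum(bernoulli_log_pmf(x, theta) for x in data)
    return -log_lik / len(data)

random.seed(42)
q_true, theta = 0.3, 0.4
exact = mean_log_loss(q_true, theta)

gaps = {}
for n in (10, 1000, 100000):
    data = [1 if random.random() < q_true else 0 for _ in range(n)]
    gaps[n] = abs(empirical_mean_log_loss(data, theta) - exact)
    print(n, gaps[n])
```

The gap is random and need not shrink monotonically, but it is typically of order \(1/\sqrt{n}\).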

Minimum KL divergence and maximum likelihood

If we knew \(Q\) we would simply minimise \(D_{\text{KL}}(Q, P(\boldsymbol \theta))\) to find the particular model \(P(\boldsymbol \theta)\) that is closest to the true model, or equivalently, we would minimise the mean log-loss \(H(Q, P(\boldsymbol \theta))\). However, since we actually don’t know \(Q\) this is not possible.

However, for large sample size \(n\) when the empirical distribution \(\hat{Q}_n\) is a good approximation for \(Q\), we can use the results from the previous section. Thus, instead of minimising the KL divergence \(D_{\text{KL}}(Q, P(\boldsymbol \theta))\) we simply minimise \(H(\hat{Q}_n, P(\boldsymbol \theta))\) which is the same as maximising the log-likelihood \(\ell_n ({\boldsymbol \theta})\).

Conversely, this implies that maximising the likelihood with regard to \(\boldsymbol \theta\) is equivalent (asymptotically for large \(n\)!) to minimising the KL divergence of the approximating model and the unknown true model.

\[ \begin{split} \hat{\boldsymbol \theta}_{ML} &= \underset{\boldsymbol \theta}{\arg \max}\,\, \ell_n(\boldsymbol \theta) \\ &= \underset{\boldsymbol \theta}{\arg \min}\,\, H(\hat{Q}_n, P(\boldsymbol \theta)) \\ &\approx \underset{\boldsymbol \theta}{\arg \min}\,\, D_{\text{KL}}(Q, P(\boldsymbol \theta)) \\ \end{split} \]
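As a small worked illustration of this chain of equalities, consider a Bernoulli model. This model is chosen here only as an example and is not part of the derivation above. Let \(P(\theta) = \text{Ber}(\theta)\), let the true model be \(Q = \text{Ber}(q)\), and let \(\bar{x} = \frac{1}{n}\sum_{i=1}^n x_i\) be the observed proportion of ones. Then \[ \begin{split} H(Q, P(\theta)) &= -q \log \theta - (1-q) \log(1-\theta) \\ H(\hat{Q}_n, P(\theta)) &= -\frac{1}{n} \ell_n(\theta) = -\bar{x} \log \theta - (1-\bar{x}) \log(1-\theta) \\ \end{split} \] Setting the derivative with regard to \(\theta\) to zero shows that the first expression is minimised at \(\theta = q\) and the second at \(\theta = \bar{x}\). Hence \(\hat{\theta}_{ML} = \bar{x}\), and since \(\bar{x} \to q\) by the law of large numbers the MLE approaches the minimiser of the KL divergence for large \(n\).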

Therefore, the reasoning behind the method of maximum likelihood is that it minimises a large sample approximation of the KL divergence of the candidate model \(P(\boldsymbol \theta)\) from the unknown true model \(Q\). In other words, maximum likelihood estimators are minimum empirical KL divergence estimators.

As the KL divergence is a functional of the true distribution \(Q\), maximum likelihood provides empirical estimators for parametric models.

As a consequence of the close link between maximum likelihood and KL divergence, maximum likelihood inherits for large \(n\) (and only then!) all the optimality properties from KL divergence.

7.3 Properties of maximum likelihood estimation

Consistency of maximum likelihood estimates

One important property of the method of maximum likelihood is that in general it produces consistent estimates. This means that estimates are well behaved so that they become more accurate with more data and in the limit of infinite data converge to the true parameters.

Specifically, if the true underlying model \(Q\) is contained in the set of specified candidate models \(P(\boldsymbol \theta)\) \[ \underbrace{Q}_{\text{true model}} \subset \underbrace{P(\boldsymbol \theta)}_{\text{specified models}} \] then there is a parameter \(\boldsymbol \theta_{\text{true}}\) for which \(Q = P(\boldsymbol \theta_{\text{true}})\).

Maximisation of the likelihood function is, for large \(n\), equivalent to minimising the KL divergence, and \(D_{\text{KL}}(Q,P(\boldsymbol \theta))\rightarrow 0\) implies \(P(\boldsymbol \theta)\rightarrow Q\), hence \[ \hat{\boldsymbol \theta}_{ML} \overset{\text{large }n}{\longrightarrow} \boldsymbol \theta_{\text{true}} \] Thus, given sufficient data, the maximum likelihood estimate of the model parameters will converge to the true parameter values. Note that the above assumes the model family, and therefore the number of parameters, is fixed.

As a consequence of consistency, maximum likelihood estimates are asymptotically unbiased. As we will see in the examples, they can still be biased in finite samples.
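A quick simulation can illustrate finite-sample bias that vanishes for large \(n\). The sketch below assumes a normal model with true variance 1 (an illustrative choice) and uses hypothetical helper names. The MLE of the variance divides by \(n\) rather than \(n-1\), so its expectation is \(\frac{n-1}{n}\sigma^2\). This is noticeably below \(\sigma^2\) for \(n=5\) and very close to it for \(n=200\).

```python
import random

def variance_mle(data):
    """MLE of the variance in a normal model (divides by n, not n - 1)."""
    n = len(data)
    mean = sum(data) / n
    return sum((x - mean) ** 2 for x in data) / n

def average_variance_mle(n, replicates, rng):
    """Monte Carlo average of the variance MLE over simulated data sets of size n."""
    total = 0.0
    for _ in range(replicates):
        data = [rng.gauss(0.0, 1.0) for _ in range(n)]  # true variance is 1
        total += variance_mle(data)
    return total / replicates

rng = random.Random(0)
avg_small = average_variance_mle(n=5, replicates=20000, rng=rng)
avg_large = average_variance_mle(n=200, replicates=2000, rng=rng)

# Theoretical expectations are (n - 1) / n: 0.8 for n = 5 and 0.995 for n = 200
print(avg_small, avg_large)
```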

Note that even if the candidate model family \(P(\boldsymbol \theta)\) is misspecified (so that it does not contain the actual true model), the maximum likelihood estimate is still optimal, for large sample size, in the sense that it will identify the distribution in the family that is closest to the true model in terms of KL divergence.

Finally, inconsistent maximum likelihood estimates can occur, but only in nonregular situations, for example when the MLE lies on a boundary or when there are singularities in the likelihood function. Furthermore, inconsistency can arise when the number of parameters grows with the sample size and hence the information per parameter does not sufficiently increase (as in the well-known Neyman–Scott paradox).

Invariance property of the maximum likelihood

Maximum likelihood is invariant under reparametrisation, i.e. under transformation of the model parameters the achieved maximum likelihood stays the same. This property is shared by KL divergence minimisation as the achieved minimum KL divergence is also invariant under a change of parameters.

A parameter is just an arbitrary label to index a specific distribution within a distribution family, and changing that label does not affect the maximum (likelihood) or the minimum (KL divergence). For example, consider a function \(h_x(x)\) with a maximum at \(x_{\max} = \text{arg max } h_x(x)\). Now relabel the argument using \(y = g(x)\) where \(g\) is an invertible function. Then the function in terms of \(y\) is \(h_y(y) = h_x( g^{-1}(y))\). Clearly, this function has a maximum at \(y_{\max} = g(x_{\max})\) since \(h_y(y_{\max}) = h_x(g^{-1}(y_{\max} ) ) = h_x( x_{\max} )\). Furthermore, the achieved maximum value is the same.

In application to maximum likelihood, assume we transform a parameter \(\theta\) into another parameter \(\omega\) using an invertible function so that \(\omega= g(\theta)\). Then the maximum likelihood estimate \(\hat{\omega}_{ML}\) of the new parameter \(\omega\) is simply the transformation of the maximum likelihood estimate \(\hat{\theta}_{ML}\) of the original parameter \(\theta\) with \(\hat{\omega}_{ML}= g( \hat{\theta}_{ML})\). The achieved maximum likelihood is the same in both cases.

The invariance property can be very useful in practice because it is often easier (and sometimes numerically more stable) to maximise the likelihood using a different set of parameters.
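The invariance property can be checked numerically in a minimal sketch. It assumes a Bernoulli model with \(k = 7\) successes in \(n = 10\) trials (made-up numbers) and the log-odds reparametrisation \(\omega = g(\theta) = \log\frac{\theta}{1-\theta}\). The helper names, including the simple ternary search, are hypothetical. Maximising directly over \(\omega\) should return \(g(\hat{\theta}_{ML})\) with the same achieved log-likelihood.

```python
import math

def loglik_theta(theta, k, n):
    """Bernoulli log-likelihood in the original parameter theta."""
    return k * math.log(theta) + (n - k) * math.log(1.0 - theta)

def loglik_omega(omega, k, n):
    """Same log-likelihood written in the log-odds omega = log(theta / (1 - theta))."""
    # log(theta) = -log(1 + exp(-omega)), log(1 - theta) = -log(1 + exp(omega))
    return -k * math.log1p(math.exp(-omega)) - (n - k) * math.log1p(math.exp(omega))

def ternary_max(f, lo, hi, iters=200):
    """Maximise a unimodal function on [lo, hi] by ternary search."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if f(m1) < f(m2):
            lo = m1
        else:
            hi = m2
    return 0.5 * (lo + hi)

k, n = 7, 10
theta_hat = k / n                                   # analytic MLE of theta
omega_hat = ternary_max(lambda w: loglik_omega(w, k, n), -10.0, 10.0)
omega_from_theta = math.log(theta_hat / (1.0 - theta_hat))  # g(theta_hat)

print(omega_hat, omega_from_theta)
print(loglik_omega(omega_hat, k, n), loglik_theta(theta_hat, k, n))
```

A practical advantage of the log-odds scale is that \(\omega\) is unconstrained, so the optimiser cannot step outside the parameter space.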

See Worksheet L1 for an example application of the invariance property.

Sufficient statistics

Another important concept is that of sufficient statistics, which summarise the information available in the data about a parameter in a model.

A statistic \(\boldsymbol t(D)\) is called a sufficient statistic for the model parameters \(\boldsymbol \theta\) if the corresponding likelihood function can be written using only \(\boldsymbol t(D)\) in the terms that involve \(\boldsymbol \theta\) such that \[ L(\boldsymbol \theta| D) = h( \boldsymbol t(D) , \boldsymbol \theta) \, k(D) \,, \] where \(h()\) and \(k()\) are positive-valued functions. This is known as the Fisher–Neyman factorisation. Equivalently on log-scale this becomes \[ \ell(\boldsymbol \theta| D) = \log h( \boldsymbol t(D) , \boldsymbol \theta) + \log k(D) \,. \]

By construction, estimation and inference about \(\boldsymbol \theta\) based on the factorised likelihood \(L(\boldsymbol \theta)\) is mediated through the sufficient statistic \(\boldsymbol t(D)\) and does not require knowledge of the original data \(D\). Instead, the sufficient statistic \(\boldsymbol t(D)\) contains all the information in \(D\) required to learn about the parameter \(\boldsymbol \theta\).

Note that a sufficient statistic always exists since the data \(D\) are themselves sufficient statistics, with \(\boldsymbol t(D) = D\). However, in practice one aims to find sufficient statistics that summarise the data \(D\) and hence provide data reduction. This will become clear in the examples below.

Furthermore, sufficient statistics are not unique since applying a one-to-one transformation to \(\boldsymbol t(D)\) yields another sufficient statistic.

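Because the part of the likelihood that depends on \(\boldsymbol \theta\) involves the data only through \(\boldsymbol t(D)\), the MLE depends on the data only through the sufficient statistic. Thus, if the MLE \(\hat{\boldsymbol \theta}_{ML}\) of \(\boldsymbol \theta\) exists and is unique then the MLE is a unique function of the sufficient statistic \(\boldsymbol t(D)\). If the MLE is not unique then it can be chosen to be a function of \(\boldsymbol t(D)\).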
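The data reduction provided by sufficient statistics can be sketched with a normal model, used here only as an illustrative assumption. For known \(n\) the pair \(\boldsymbol t(D) = (\sum_i x_i, \sum_i x_i^2)\) is sufficient for \((\mu, \sigma^2)\). In the code below (all names hypothetical) two different made-up data sets share the same sufficient statistics, so they yield the same log-likelihood for any parameter value and therefore the same MLE.

```python
import math

def normal_loglik_from_data(data, mu, sigma2):
    """Normal log-likelihood computed from the raw observations."""
    return sum(
        -0.5 * math.log(2.0 * math.pi * sigma2) - (x - mu) ** 2 / (2.0 * sigma2)
        for x in data
    )

def sufficient_stats(data):
    """Return (n, sum of x, sum of x^2)."""
    return len(data), sum(data), sum(x * x for x in data)

def normal_loglik_from_stats(n, s1, s2, mu, sigma2):
    """Same log-likelihood, computed from the sufficient statistics only."""
    return (-0.5 * n * math.log(2.0 * math.pi * sigma2)
            - (s2 - 2.0 * mu * s1 + n * mu * mu) / (2.0 * sigma2))

def normal_mle_from_stats(n, s1, s2):
    """MLE of (mu, sigma^2) as a function of the sufficient statistics."""
    mu_hat = s1 / n
    return mu_hat, s2 / n - mu_hat ** 2

data_a = [0.0, 3.0, 3.0]
data_b = [1.0, 1.0, 4.0]   # different data, same n, sum and sum of squares

stats_a = sufficient_stats(data_a)
stats_b = sufficient_stats(data_b)
print(stats_a, stats_b)
print(normal_loglik_from_data(data_a, 1.5, 2.5),
      normal_loglik_from_data(data_b, 1.5, 2.5),
      normal_loglik_from_stats(*stats_a, 1.5, 2.5))
print(normal_mle_from_stats(*stats_a))
```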

7.4 Maximum likelihood estimation for regular models

Regular models

A regular model is one that is well-behaved and well-suited for model fitting by optimisation. In particular this requires that:

- the support does not depend on the parameters,

- the model is identifiable (in particular the model is not over-parametrised and has a minimal set of parameters),

- the density/probability mass function and hence the log-likelihood function is twice differentiable everywhere with regard to the parameters,

- the maximum (peak) of the likelihood function lies inside the parameter space and not at a boundary,

- the second derivative of the log-likelihood at the maximum is negative and not zero (for multiple parameters: the Hessian matrix at the maximum is negative definite and not singular).

Most models considered in this course are regular.

Maximum likelihood estimation in regular models

For a regular model, maximum likelihood estimation and the necessary optimisation are greatly simplified by being able to use gradient and curvature information.

In order to maximise \(\ell_n(\boldsymbol \theta)\) one may use the score function \(\boldsymbol S_n(\boldsymbol \theta)\) which is the first derivative of the log-likelihood function with regard to the parameters (see footnote 1 at the end of this chapter).

\[ \begin{array}{ll} S_n(\theta) = \frac{d \ell_n(\theta)}{d \theta} & \text{scalar parameter $\theta$: first derivative}\\ \\ \boldsymbol S_n(\boldsymbol \theta)=\nabla \ell_n(\boldsymbol \theta) & \text{multivariate parameter $\boldsymbol \theta$: gradient}\\ \end{array} \]

In this case a necessary (but not sufficient) condition for the MLE is that \[ \boldsymbol S_n(\hat{\boldsymbol \theta}_{ML}) = 0 \]

To demonstrate that the log-likelihood function actually achieves a maximum at \(\hat{\boldsymbol \theta}_{ML}\) the curvature at the MLE must be negative, i.e. the log-likelihood must be locally concave at the MLE.

In the case of a single parameter (scalar \(\theta\)) this requires checking that the second derivative of the log-likelihood function with regard to the parameter, \[ H_n(\theta) = \frac{d^2 \ell_n(\theta)}{d \theta^2} \,, \] is negative at the MLE: \[ H_n(\hat{\theta}_{ML} ) < 0 \]

In the case of a parameter vector (multivariate \(\boldsymbol \theta\)) one first computes the Hessian matrix (matrix of second order derivatives) of the log-likelihood function \[ \boldsymbol H_n(\boldsymbol \theta) = \nabla \nabla^T \ell_n(\boldsymbol \theta) \] For a multivariate parameter vector \(\boldsymbol \theta\) of dimension \(d\) the Hessian is a matrix of size \(d \times d\). Then one needs to verify that the Hessian matrix is negative definite at the MLE: \[ \boldsymbol H_n(\hat{\boldsymbol \theta}_{ML}) < 0, \] i.e. all its eigenvalues must be negative.
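The use of score and curvature can be sketched for a scalar parameter. The example assumes a Poisson model with made-up counts, and the function names are hypothetical. Here \(S_n(\lambda) = \sum_i x_i/\lambda - n\) and \(H_n(\lambda) = -\sum_i x_i/\lambda^2\), and the analytic MLE is the sample mean. A Newton-Raphson iteration \(\lambda \leftarrow \lambda - S_n(\lambda)/H_n(\lambda)\) uses both quantities to find the root of the score, after which the sign of the second derivative is checked.

```python
def poisson_score(lam, data):
    """S_n(lambda): first derivative of the Poisson log-likelihood."""
    return sum(data) / lam - len(data)

def poisson_hessian(lam, data):
    """H_n(lambda): second derivative of the Poisson log-likelihood."""
    return -sum(data) / lam ** 2

def newton_raphson(data, lam0, max_iter=50, tol=1e-12):
    """Solve S_n(lambda) = 0 by Newton-Raphson, starting from lam0."""
    lam = lam0
    for _ in range(max_iter):
        step = poisson_score(lam, data) / poisson_hessian(lam, data)
        lam -= step
        if abs(step) < tol:
            break
    return lam

data = [2, 4, 3, 5, 1, 3, 4, 2, 3, 5]   # made-up counts, sample mean 3.2

lam_hat = newton_raphson(data, lam0=1.0)
print(lam_hat, sum(data) / len(data))      # numerical vs analytic MLE
print(poisson_score(lam_hat, data))        # approximately zero at the MLE
print(poisson_hessian(lam_hat, data) < 0)  # negative curvature: a maximum
```

The starting value matters: for this model the plain Newton iteration only converges when started between 0 and twice the sample mean, so `lam0=1.0` is a deliberate choice for this data set.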

Invariance of score function and second derivative of the log-likelihood

The score function \(\boldsymbol S_n(\boldsymbol \theta)\) is invariant under a transformation of the sample space. Assume \(\boldsymbol x\) has log-density \(\log f_{\boldsymbol x}(\boldsymbol x| \boldsymbol \theta)\) and let \(\boldsymbol y = \boldsymbol y(\boldsymbol x)\) be an invertible transformation of \(\boldsymbol x\) that does not depend on \(\boldsymbol \theta\). Then the log-density for \(\boldsymbol y\) is \[ \log f_{\boldsymbol y}(\boldsymbol y| \boldsymbol \theta) = \log |\det\left( D\boldsymbol x(\boldsymbol y) \right)| + \log f_{\boldsymbol x}\left( \boldsymbol x(\boldsymbol y)| \boldsymbol \theta\right) \] where \(D\boldsymbol x(\boldsymbol y)\) is the Jacobian matrix of the inverse transformation \(\boldsymbol x(\boldsymbol y)\). When taking the derivative of the log-likelihood function with regard to the parameter \(\boldsymbol \theta\), the first term containing the Jacobian determinant vanishes because it does not depend on \(\boldsymbol \theta\). Hence the score function \(\boldsymbol S_n(\boldsymbol \theta)\) is not affected by a change of variables.

As a consequence, the second derivative of the log-likelihood function with regard to \(\boldsymbol \theta\) is also invariant under transformations of the sample space.
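As a brief worked illustration, consider a single observation from an exponential model with rate \(\theta\). The model and the transformation are chosen here only as an example. The log-density is \(\log f_x(x|\theta) = \log \theta - \theta x\) for \(x > 0\). Transforming to \(y = \log x\), the inverse is \(x(y) = e^y\) with Jacobian \(dx/dy = e^y\), so that \[ \log f_y(y|\theta) = y + \log \theta - \theta e^y \] Differentiating with regard to \(\theta\) gives \[ \frac{d}{d\theta} \log f_y(y|\theta) = \frac{1}{\theta} - e^y = \frac{1}{\theta} - x = \frac{d}{d\theta} \log f_x(x|\theta) \] since the Jacobian term \(y\) does not involve \(\theta\). The second derivative equals \(-1/\theta^2\) in both cases.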

7.5 Further reading

The developments that led to the theory of maximum likelihood are reviewed, e.g., in Aldrich (1997), Stigler (2007) and Hand (2015).

See Kullback (1959) for a view of maximum likelihood and corresponding statistical procedures in terms of minimising KL divergence.

The method of maximum likelihood as unified statistical theory was developed by Ronald A. Fisher in the early 20th century. A key seminal paper is Fisher (1922).

The equivalence of maximum likelihood estimation and minimisation of Kullback–Leibler divergence was first made explicit in the 1950s, see Kullback (1959).

Footnote 1: The score function \(\boldsymbol S_n(\boldsymbol \theta)\) as the gradient of the log-likelihood function must not be confused with the scoring rule \(S(x, P)\).